# 1. Environment Preparation

First, please ensure that your system meets the following basic requirements:

- Operating system: Linux (for example, Ubuntu 20.04 LTS) or another Unix-like operating system.

- Development toolkit: install the necessary build tools and libraries, such as `build-essential`, `libnuma-dev`, and `pkg-config`.

- Kernel version: for optimal performance, the latest stable kernel version is recommended.

- Hardware: a physical or virtual environment with RDMA support.

| |
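The requirements above can be checked with a short preflight script. This is a minimal sketch, not part of the original procedure: the helper name `have_cmd` is illustrative, and the RDMA check assumes that devices are exposed under the standard Linux sysfs path `/sys/class/infiniband`.

```shell
#!/bin/sh
# Preflight sketch: report which build prerequisites are present.

# have_cmd NAME: succeed if NAME is an executable on PATH (hypothetical helper).
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

for tool in gcc make pkg-config; do
    if have_cmd "$tool"; then
        echo "found:   $tool"
    else
        echo "missing: $tool"
    fi
done

# Kernel version, for comparison against the latest stable release.
echo "kernel:  $(uname -r)"

# RDMA devices registered with the kernel appear under this sysfs directory.
if [ -d /sys/class/infiniband ] && [ -n "$(ls -A /sys/class/infiniband 2>/dev/null)" ]; then
    echo "rdma:    $(ls /sys/class/infiniband | tr '\n' ' ')"
else
    echo "rdma:    no device found"
fi
```

On Ubuntu, missing packages can usually be installed with `sudo apt-get install -y build-essential libnuma-dev pkg-config`.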

# 2. Compile and Install UCX 1.15.0

- Download the UCX source package:

| |
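As a sketch of the download step, the release tarball can be fetched from the openucx/ucx GitHub releases page. The helper name `fetch_ucx` is hypothetical, and `wget` is assumed to be installed.

```shell
#!/bin/sh
UCX_VERSION=1.15.0
UCX_TARBALL="ucx-${UCX_VERSION}.tar.gz"
# Release tarballs are published on the openucx/ucx GitHub releases page.
UCX_URL="https://github.com/openucx/ucx/releases/download/v${UCX_VERSION}/${UCX_TARBALL}"

# fetch_ucx: download and unpack the tarball, then enter the source tree
# (hypothetical helper; run it from an empty working directory).
fetch_ucx() {
    wget "$UCX_URL" &&
    tar -xzf "$UCX_TARBALL" &&
    cd "ucx-${UCX_VERSION}"
}
```

Calling `fetch_ucx` leaves the current shell inside `ucx-1.15.0`, ready for the configure step.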

- Configure UCX compile options:

| |

You can add more configuration options according to actual needs, such as specifying a specific network card type or enabling specific features.
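One possible configuration is sketched here. The install prefix is an assumption, and the two optional flags are examples of the kind of feature selection described above, not necessarily the options used originally. `--with-verbs` makes configure fail if the verbs development headers are missing, so drop it on systems without RDMA libraries.

```shell
#!/bin/sh
# Install prefix is an assumption; pick any directory you can write to.
UCX_PREFIX="$HOME/opt/ucx-1.15.0"

# configure_ucx: run from the top of the unpacked UCX source tree
# (hypothetical helper).
configure_ucx() {
    # contrib/configure-release wraps ./configure with optimized release settings.
    # --enable-mt turns on multi-threading support.
    # --with-verbs requests the InfiniBand/RoCE verbs transport.
    ./contrib/configure-release \
        --prefix="$UCX_PREFIX" \
        --enable-mt \
        --with-verbs
}
```

Running `./configure --help` in the source tree lists the full set of available options.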

- Compile and install:

| |
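The build and install step might look like the following sketch. The helper names are hypothetical, and the prefix passed to `show_ucx_version` is whatever was given to `--prefix` during configuration.

```shell
#!/bin/sh
# Use one make job per CPU; fall back to 1 if nproc is unavailable.
JOBS=$(nproc 2>/dev/null || echo 1)

# build_ucx: run from the configured UCX source tree (hypothetical helper).
build_ucx() {
    make -j"$JOBS" &&
    make install
}

# show_ucx_version PREFIX: print the version of the UCX installed under PREFIX.
show_ucx_version() {
    "$1/bin/ucx_info" -v
}
```

If the prefix is a system directory, `make install` needs root privileges. After installation, `ucx_info -d` lists the transports and devices that UCX detected.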

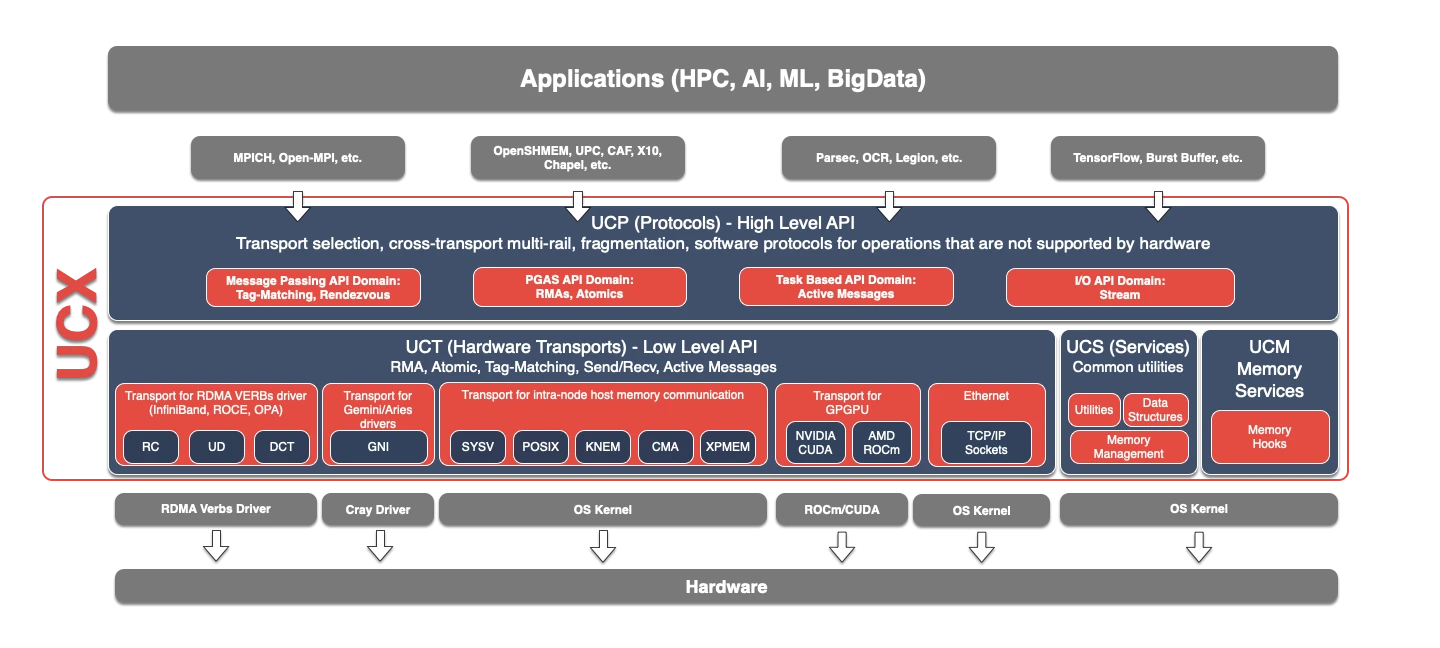

- UCX Architecture Description

- The architecture of UCX 1.15.0 is shown in the figure below:

| Component | Role | Description |

|---|---|---|

| UCP | Protocol | Implements advanced abstractions, such as tag matching, streams, connection negotiation and establishment, multi-track, and handling different types of memory. |

| UCT | Transport | Implements low-level communication primitives, such as active messages, remote memory access, and atomic operations. |

| UCM | Memory | A collection of general data structures, algorithms, and system utilities. |

| UCP | Protocol | Intercept memory allocation and release events used by memory registration cache. |

# 3. Compile and Install OpenMPI 5.0.0

- Download the OpenMPI source package:

| |
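A sketch of the download step, assuming the tarball is taken from the official Open MPI download server and that `wget` and `bzip2` are installed. The helper name `fetch_ompi` is hypothetical.

```shell
#!/bin/sh
OMPI_VERSION=5.0.0
# Release series directory on the download server, e.g. "v5.0" for 5.0.0.
OMPI_SERIES="v${OMPI_VERSION%.*}"
OMPI_TARBALL="openmpi-${OMPI_VERSION}.tar.bz2"
OMPI_URL="https://download.open-mpi.org/release/open-mpi/${OMPI_SERIES}/${OMPI_TARBALL}"

# fetch_ompi: download and unpack the tarball, then enter the source tree
# (hypothetical helper; run it from an empty working directory).
fetch_ompi() {
    wget "$OMPI_URL" &&
    tar -xjf "$OMPI_TARBALL" &&
    cd "openmpi-${OMPI_VERSION}"
}
```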

- Configure OpenMPI compile options, specifying the use of UCX as the transport layer:

| |
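A configuration along these lines is sketched here. Both prefixes are assumptions: `UCX_PREFIX` must match the directory UCX was installed into, and `OMPI_PREFIX` is an arbitrary target. The flags are the ones discussed in this section; the helper name `configure_ompi` is hypothetical.

```shell
#!/bin/sh
UCX_PREFIX="$HOME/opt/ucx-1.15.0"      # where UCX was installed (assumed)
OMPI_PREFIX="$HOME/opt/openmpi-5.0.0"  # where OpenMPI will be installed (assumed)

# configure_ompi: run from the top of the unpacked OpenMPI source tree.
configure_ompi() {
    # --with-ucx points the build at the UCX installation.
    # --enable-mca-no-build=btl-uct skips the btl_uct component.
    # --enable-mpirun-prefix-by-default makes mpirun behave as if --prefix were given.
    # --without-hcoll disables the HCOLL component.
    ./configure \
        --prefix="$OMPI_PREFIX" \
        --with-ucx="$UCX_PREFIX" \
        --enable-mca-no-build=btl-uct \
        --enable-mpirun-prefix-by-default \
        --without-hcoll
}
```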

Note

- For OpenMPI 4.0 and later versions, there may be compilation errors with the `btl_uct` component. This component is not important for using UCX; therefore, it can be disabled with `--enable-mca-no-build=btl-uct`.

- The `--enable-python-bindings` option is used to enable Python bindings.

- The `--enable-mpirun-prefix-by-default` option is used to automatically add the `--prefix` option when starting an MPI program with `mpirun`.

- The `--without-hcoll` option is used to disable the HCOLL component. If it is not set, compilation reports the errors `cannot find -lnuma` and `cannot find -ludev`.

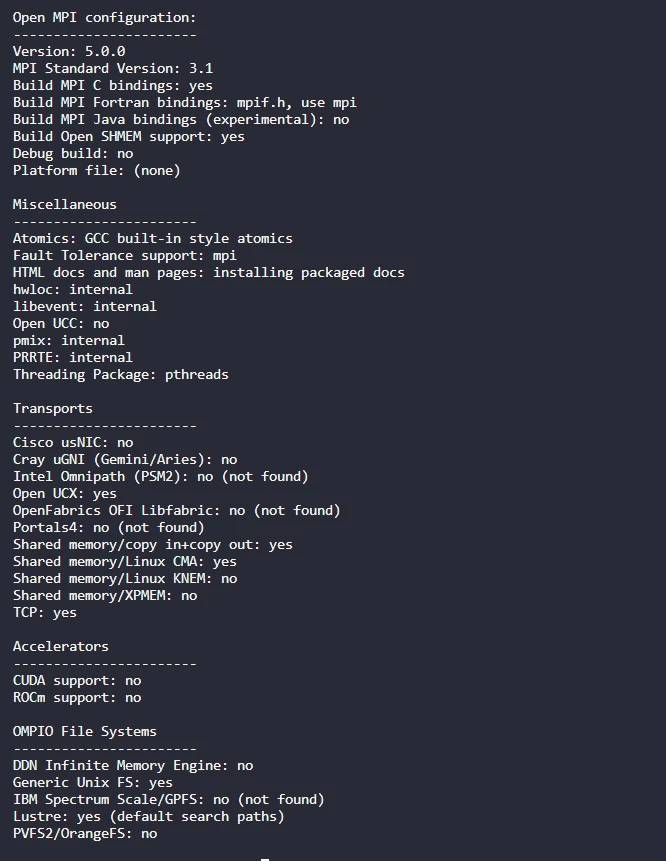

The final configuration options are as follows:

- Compile and install:

| |
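The build step, plus a quick check that the UCX components were actually built, might be sketched as follows. The helper names are hypothetical, and the check assumes that `ompi_info` lists the component on a line containing `MCA pml: ucx`.

```shell
#!/bin/sh
# build_ompi: run from the configured OpenMPI source tree (hypothetical helper).
build_ompi() {
    make -j"$(nproc 2>/dev/null || echo 1)" &&
    make install
}

# has_ucx_pml: read ompi_info output on stdin and succeed if the UCX PML
# component is listed.
has_ucx_pml() {
    grep -q 'MCA pml: ucx'
}
```

After `build_ompi` finishes, `ompi_info | has_ucx_pml && echo "UCX PML available"` confirms that OpenMPI can use UCX as its point-to-point layer.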

# 4. Verify Installation and Set Environment Variables

After installation, you can verify whether UCX and OpenMPI have been successfully integrated by running a simple MPI program:

| |

(If running as root, you need to add the `--allow-run-as-root` option. If there is an RDMA device, you can select it by passing `-x UCX_NET_DEVICES=<device>`.)
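As an illustration, the following sketch assembles such a verification command. The function name `mpirun_check_cmd`, the hostnames `node1,node2`, and the device name `mlx5_0:1` are placeholders, not values from the original setup. The script only prints the command line so it can be reviewed before it is run.

```shell
#!/bin/sh
# mpirun_check_cmd HOSTS [DEVICE]: print an mpirun command line that runs
# `hostname` with two processes on HOSTS, using the UCX PML.
mpirun_check_cmd() {
    cmd="mpirun -n 2 --host $1 --mca pml ucx"
    # mpirun refuses to run as root unless explicitly allowed.
    if [ "$(id -u)" -eq 0 ]; then
        cmd="$cmd --allow-run-as-root"
    fi
    # Optionally pin UCX to one device by exporting UCX_NET_DEVICES to all ranks.
    if [ -n "${2:-}" ]; then
        cmd="$cmd -x UCX_NET_DEVICES=$2"
    fi
    echo "$cmd hostname"
}

# Hostnames and device name are placeholders.
mpirun_check_cmd node1,node2 mlx5_0:1
```

Copy the printed line into the shell to run it, after replacing the placeholders with real host and device names.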

Note

If you need to use it with Slurm, you can refer to "Launching with Slurm" in the Open MPI documentation. One way is to first allocate resources through `salloc`, and then run the `mpirun` command on the allocated resources. In this case, `--hostfile`, `--host`, `-n`, etc. do not need to be set, for example:

| |
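A minimal sketch of the Slurm variant, assuming a cluster where two nodes can be allocated. The node and task counts are illustrative, and the helper name `slurm_check` is hypothetical.

```shell
#!/bin/sh
# slurm_check: allocate two nodes with one task each, then launch via mpirun.
# mpirun takes the host list and slot counts from the Slurm allocation,
# so --hostfile, --host, and -n are omitted.
slurm_check() {
    salloc -N 2 --ntasks-per-node=1 mpirun --mca pml ucx hostname
}
```

Passing the command directly to `salloc` releases the allocation as soon as `mpirun` exits. Running `salloc` alone opens a shell inside the allocation, where `mpirun` can be invoked repeatedly.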

If everything works, the output lists the two hostnames. For convenience, you can add the OpenMPI `bin` directory and related directories to the `PATH` environment variable:

| |
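For example, assuming the two packages were installed under the prefixes shown (both paths are placeholders for the values passed to `--prefix`), the environment could be set up like this:

```shell
#!/bin/sh
UCX_PREFIX="$HOME/opt/ucx-1.15.0"      # assumed UCX install prefix
OMPI_PREFIX="$HOME/opt/openmpi-5.0.0"  # assumed OpenMPI install prefix

# Put the OpenMPI and UCX tools first on PATH.
export PATH="$OMPI_PREFIX/bin:$UCX_PREFIX/bin:$PATH"

# Let the dynamic linker find both libraries; append the previous value
# only if it was non-empty, to avoid a trailing colon.
export LD_LIBRARY_PATH="$OMPI_PREFIX/lib:$UCX_PREFIX/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
```

Adding these lines to `~/.bashrc` or a similar startup file makes the settings persistent across sessions.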

# 5. UCX Performance Testing

Sender:

| |

Recipient:

| |
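A typical `ucx_perftest` run uses two processes: one started without a hostname, which waits as the server, and one that connects to it as the client and drives the test. The sketch here assumes a tag-matching bandwidth test with 1 MiB messages. The test type, message size, and helper names are illustrative choices, not the original commands.

```shell
#!/bin/sh
# perftest_server: start ucx_perftest in server mode; it waits for a client
# (hypothetical helper; run on the first node).
perftest_server() {
    ucx_perftest
}

# perftest_client SERVER: connect to SERVER and run the UCP tag-matching
# bandwidth test with 1 MiB messages (run on the second node).
perftest_client() {
    ucx_perftest "$1" -t tag_bw -s 1048576
}
```

Start the server side first, then the client side with the server's hostname or IP address. `ucx_perftest -h` lists the other available test types and options.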

In summary, the steps above compile and install UCX 1.15.0 and OpenMPI 5.0.0 from source and integrate them into a working high-performance computing environment. In practice, you can tune the configuration further for your specific needs to achieve better performance.